Beginner’s guide to Gemini

Introduction

When ChatGPT came out, it was amazing how much it knew and how many problems it could solve. It could solve my homework problems, even if it made mistakes from time to time. Since then, more large language models (LLMs) have become available, such as DeepSeek, Claude Sonnet, and Gemini. They all have their strengths and weaknesses. For instance, Sonnet is good at coding and DeepSeek is good at reasoning. GPT is also good at coding and reasoning. They are available free of charge as chatbots on their websites. However, using them through an API to build applications costs money.

Fortunately, Gemini offers a generous free tier that lets us explore most of its capabilities through the API. While each model has its specialties, Gemini offers techniques commonly used in state-of-the-art LLMs, such as thinking, chat, internet use, structured output, and function calling. Gemini also has a context window of one million tokens, which can hold about 50,000 lines of code or 8 average-length English novels. We can learn about these features and see how to apply them to build applications. If we later decide to use a more powerful model, such as Sonnet from Anthropic or GPT-4o, the transition will be smoother. We’ll primarily use Gemini 2.5 Flash, which is fast and versatile, and explore Gemini 2.0 for some specialized image tasks. We will cover many fun things Gemini can do, such as function calling, image generation, text to speech, and internet use (Google Search).

We will use Python in a Jupyter notebook with the Gemini Developer API.

Here are the topics we will go over:

- Setup / authentication

- Using system instructions

- Chat

- Image inputs

- Text to speech

- Thinking

- Structured outputs

- Function calling

- Google search

We will cover the basics of each of these features. We will also use function calling to add data to Google Sheets.

Setup / authentication

To set up Gemini, we need to install the google-genai package and get a Gemini API key. To install, execute

pip install google-genai

in a terminal.

To get a Gemini API key, go to Gemini API and click on “Create API Key”. With the API key, you can either store it in an environment variable or pass it to the client manually.

- Using an environment variable

To use an environment variable, go to a terminal and type

export GEMINI_API_KEY=<your API key>

where <your API key> is your API key. Then you can set up a Gemini client this way:

from google import genai

from google.genai import types

client = genai.Client()

- Manually use API key

You can also pass the API key manually. To do so, you can use the following code:

from google import genai

from google.genai import types

client = genai.Client(api_key="<your API key>")

where <your API key> is your API key.
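Before creating the client, it can help to check that the key is actually available, so a missing variable shows up as a clear message rather than a later authentication error. The helper below is a minimal sketch using only the standard library; `load_api_key` is a hypothetical name, not part of the Gemini SDK.

```python
import os


def load_api_key(env=os.environ, name="GEMINI_API_KEY"):
    """Return the API key stored under `name`, or raise a helpful error.

    `load_api_key` is a hypothetical helper, not part of the Gemini SDK.
    """
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set; export it or pass api_key explicitly."
        )
    return key


# Demo with a stand-in mapping instead of the real environment.
print(load_api_key({"GEMINI_API_KEY": "demo-key"}))
```

In a notebook, the result could then be passed along as `genai.Client(api_key=load_api_key())`.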

After setting up the client without any error, we can start using Gemini.

r = client.models.generate_content(

model="gemini-2.5-flash",

contents="Tell me about DNA in two sentences."

)

r

GenerateContentResponse(candidates=[Candidate(content=Content(parts=[Part(video_metadata=None, thought=None, inline_data=None, file_data=None, thought_signature=None, code_execution_result=None, executable_code=None, function_call=None, function_response=None, text='DNA, or deoxyribonucleic acid, is the fundamental molecule that carries the genetic instructions used in the growth, development, functioning, and reproduction of all known living organisms and many viruses. It\'s structured as a double helix, resembling a twisted ladder, with its "rungs" made of specific pairs of chemical bases that encode this vital information.')], role='model'), citation_metadata=None, finish_message=None, token_count=None, finish_reason=<FinishReason.STOP: 'STOP'>, url_context_metadata=None, avg_logprobs=None, grounding_metadata=None, index=0, logprobs_result=None, safety_ratings=None)], create_time=None, response_id=None, model_version='gemini-2.5-flash', prompt_feedback=None, usage_metadata=GenerateContentResponseUsageMetadata(cache_tokens_details=None, cached_content_token_count=None, candidates_token_count=71, candidates_tokens_details=None, prompt_token_count=9, prompt_tokens_details=[ModalityTokenCount(modality=<MediaModality.TEXT: 'TEXT'>, token_count=9)], thoughts_token_count=58, tool_use_prompt_token_count=None, tool_use_prompt_tokens_details=None, total_token_count=138, traffic_type=None), automatic_function_calling_history=[], parsed=None)

When we run client.models.generate_content with a model and contents (the prompt), we get a response full of information. We can focus on the text portion. The other portions are useful for understanding what is happening, but we don’t have to worry about them for now.

from IPython.display import Markdown

Markdown(r.text)

DNA, or deoxyribonucleic acid, is the fundamental molecule that carries the genetic instructions used in the growth, development, functioning, and reproduction of all known living organisms and many viruses. It’s structured as a double helix, resembling a twisted ladder, with its “rungs” made of specific pairs of chemical bases that encode this vital information.
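The other fields of the response are still worth a glance. For instance, the usage_metadata printed in the raw response reports token counts for the prompt, the thinking, and the answer. The sketch below pulls those counts into a dictionary. It uses a stand-in object carrying the numbers from that output rather than a live response, and `summarize_usage` is a hypothetical helper name.

```python
from types import SimpleNamespace


def summarize_usage(usage):
    """Collect prompt, thinking, output, and total token counts in a dict."""
    def count(field):
        # Fields can be None in a response, so treat missing values as 0.
        return getattr(usage, field, None) or 0

    return {
        "prompt": count("prompt_token_count"),
        "thoughts": count("thoughts_token_count"),
        "output": count("candidates_token_count"),
        "total": count("total_token_count"),
    }


# Stand-in object with the counts from the raw response printed earlier.
usage = SimpleNamespace(
    prompt_token_count=9,
    thoughts_token_count=58,
    candidates_token_count=71,
    total_token_count=138,
)
summary = summarize_usage(usage)
print(summary)

# The three parts add up to the reported total: 9 + 58 + 71 = 138.
assert summary["prompt"] + summary["thoughts"] + summary["output"] == summary["total"]
```

With a real response, `summarize_usage(r.usage_metadata)` should behave the same way, assuming the field names match the printed output.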

Let’s define a function to send a prompt to a model and receive a response. And it would be nice to have another function to display the response text in markdown format.

def send_msg(prompt, model="gemini-2.5-flash", config=None):
    return client.models.generate_content(
        model=model,
        contents=prompt,
        config=config
    )

def ask(prompt, model="gemini-2.5-flash", config=None):
    r = send_msg(prompt, model, config)
    display(Markdown(r.text))

ask("Briefly tell me what I should eat for lunch today.")

Since I don’t know your preferences or what you have available, aim for a balanced meal:

- Protein: Chicken, fish, beans, lentils, tofu, eggs, or hummus.

- Vegetables: Lots of greens and colorful veggies.

- Whole Grain/Complex Carb: A small serving of quinoa, brown rice, whole-wheat bread, or sweet potato.

- Healthy Fat: A few nuts, avocado, or a drizzle of olive oil.

Examples: A hearty salad with chicken and veggies, a whole-wheat wrap with hummus and a side salad, or healthy leftovers from dinner.

Tips for using large language models

As we go through the rest of the blog, the code may occasionally throw a type error because the model returned unexpected output, or fail in some similar way. When that happens, you can simply generate the response again. Every time we run the model, we get a slightly different response. Most of the time, the model knows what to do and we get the expected output. To increase the chance of getting the expected output, we can use the following tips:

- Prompt engineering techniques:

- Be specific with what you want (instead of “What is the capital of France?”, ask “What is the capital of France? Please give me the answer in a table format.”)

- Break complex tasks into steps - guide the model through a process instead of asking for everything at once

- Ask for reasoning first - use phrases like “Think step by step” or “Explain your reasoning before giving the answer”

- Specify output format early - put format requirements at the beginning: “In JSON format, list…”

- Use constraints - “In exactly 3 bullet points” or “Using only information from the provided text”

- Use structured inputs with markdown formatting (code blocks, headers, lists)

- Give examples of what you want

- Start simple and add complexity gradually

- Use system instructions

- Use structured output

- Use Google search

- Use thinking

- Use chat

- Iterate and refine - start with a basic prompt, then enhance based on what you get back
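Since re-running a request is often enough to recover from an unexpected output, the "try again" advice can be wrapped in a small helper. This is a minimal sketch, not part of the Gemini SDK: `retry` and `flaky` are hypothetical names, and `flaky` stands in for a model call that fails twice before succeeding.

```python
import time


def retry(fn, attempts=3, delay=0.0, exceptions=(Exception,)):
    """Call fn() until it succeeds or `attempts` tries are used up."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except exceptions as err:
            last_error = err
            if attempt < attempts:
                time.sleep(delay)  # optional pause before the next try
    raise last_error


# Stand-in for a model call: fails twice, then succeeds.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TypeError("unexpected output")
    return "ok"


print(retry(flaky))  # succeeds on the third call
```

In the notebook, something like `retry(lambda: ask("..."))` would re-run a prompt a few times before giving up.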

System instructions and configs

We can provide system instructions to Gemini. System instructions are instructions that Gemini follows whenever it generates a response. For example, we can tell Gemini to be concise or to be creative. We can also configure other parameters, such as temperature and safety settings. To do so, we use GenerateContentConfig.

We can provide Gemini with a fun role.

config=types.GenerateContentConfig(system_instruction="You are bacteria that makes yogurt.")

ask("How was your day?", config=config)

Oh, it was absolutely thriving, thank you for asking!

The conditions were just perfect: warm, cozy, and full of delicious lactose – exactly what a bacterium like me dreams of. My colonies were busy, busy, busy, munching away and converting that milk sugar into lovely lactic acid. You could practically feel the milk thickening and getting that wonderful tangy flavor.

It’s hard work, but incredibly rewarding. Every day is about transformation, about making something delicious and nutritious out of something simple. So, yeah, pretty great day for a little yogurt maker!

Gemini can also be a teacher.

config=types.GenerateContentConfig(
    system_instruction="You are a wise teacher who cares deeply about students."
    " You help students seek their curiosity in a fun and exciting way.")

ask("Why is 2+2=4", config=config)

Oh, what a fantastic question! You’re not just asking ‘how,’ you’re asking ‘why’ – that’s the heart of curiosity and how we truly understand the world!

Let’s dive into the magical world of numbers and discover why 2+2 always makes 4!

Imagine you have two shiny, red apples 🍎🍎. You can hold them in your hands, right? That’s your first “2.”

And then, someone gives you two more delicious, juicy apples 🍎🍎! Wow, more apples! That’s your second “2.”

Now, what happens when you put them all together in one big basket? Let’s count them!

- You had the first apple… (1)

- …then the second apple… (2)

- …then the third apple (from the new pile)… (3)

- …and finally, the fourth apple (the very last one)! (4)

You have four apples in total!

That’s exactly what ‘plus’ (+) means: it means we’re joining groups, combining things, or adding more to what we already have. When we combine a group of 2 with another group of 2, the total number of individual items is always 4.

Think about it like this, too:

- On a Number Line Adventure! Imagine a super long road with numbers marked on it, like milestones: 0, 1, 2, 3, 4, 5… If you start your journey at the number 2, and then you take two steps forward (one step… then another step!), where do you land? You land right on the number 4! Each step forward is like adding one.

So, 2+2=4 isn’t just a random rule; it’s how we’ve all agreed numbers work when we combine things or count forward. It’s a fundamental truth of quantity! It’s like the universe’s own little pattern that helps us understand how things come together.

Isn’t that neat? It’s like discovering a secret code or a hidden truth about numbers. And the amazing thing is, this idea of combining and counting applies to everything, from counting your toys to figuring out how many stars are in a constellation!

Keep asking questions like this! That’s how we truly understand the world around us. What other number mysteries are you curious about?

We can also change temperature and other configurations. More info here: https://ai.google.dev/api/generate-content#v1beta.GenerationConfig. Temperature is 1 by default. The higher the temperature, the more random the response. The lower the temperature, the more deterministic the response. So, we can raise the temperature for creative writing and lower it for solving math problems. Here’s an example of how to change the temperature:

config=types.GenerateContentConfig(

system_instruction="You are a shark living in the Jurassic with other dinosaurs.",

temperature=2,

)

ask("How was your day?", config=config)

Another turn of the currents, another excellent day in the ancient deep!

The hunger woke me, a familiar thrumming in my belly. The dawnlight, weak and shimmery, was filtering down from the surface, showing the swaying forests of ancient algae and the endless schools of quicksilver fish.

I glided, feeling the subtle vibrations in the water, reading the pressure changes, sniffing the faint electric signals. Soon enough, I picked up the frantic pulses of a group of smaller, quick-finned swimmers – not huge, but plentiful. The chase was swift and exhilarating, a burst of power, a sudden, decisive snap. The taste of fresh scales and muscle filled my mouth. A good start.

Later, as I cruised through the vast, open water, letting the currents carry me, I caught a glimpse of a distant shape – perhaps one of those long-necked Plesiosaurs, sleek and elegant, but far too large to bother with unless they were wounded. Better to keep my distance, though I’ve known more daring ones of my kind to tangle with them. We respect the powerful, but only to a point.

I saw a great Manta-like beast drift by, too, its wide fins pushing water lazily, feeding on plankton. Odd creatures, those.

The best part of the day was patrolling the edge of the continental shelf, where the deeper water meets the sun-dappled reefs. So many places for the smaller ones to hide, and for me to find them. I found a few succulent, squid-like Belemnites, easily crushed by my powerful jaws. The water here was vibrant, full of the silent conversations of fish and the distant rumbles of land-beasts, far, far away from my domain.

Now, as the deep begins to grow stiller and the light fades to nothing, I’ve found a good resting spot in a sunless crevice. My gut is full, my body heavy with satisfaction. The timeless hunger will return, of course, as it always does. But for now, the currents lull me, and I dream of the next chase. Another perfect day in the endless ocean.
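To build some intuition for the temperature setting, here is a toy sketch of temperature-scaled softmax, which is how temperature is commonly described for token sampling. It is not a description of Gemini's internals; the scores in `logits` are made-up numbers for three candidate tokens.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, flattened or sharpened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate tokens.
logits = [2.0, 1.0, 0.1]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

As the temperature goes up, the top candidate's probability shrinks and the alternatives gain weight, which is why higher values tend to give more varied text.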

Chat

So far, the model has generated each response from a clean slate. To refer back to a previous response, we would have to copy it into our prompt and send it again. This is not very convenient. Instead, we can use chat. A chat is a conversation between the user and the model. The model remembers the previous messages and generates each response based on them. Let’s see what we can do.

chat = client.chats.create(model="gemini-2.5-flash")

chat

<google.genai.chats.Chat>

r = chat.send_message("Hi, I like to play in the mud when it is raining outside.")

Markdown(r.text)

That sounds like so much fun! There’s nothing quite like the squishy, cool feeling of mud, especially when it’s fresh from the rain. It’s a classic joyful activity.

Do you make mud pies, build things, or just enjoy the wonderfully messy experience?

r = chat.send_message("Wait, I forgot. What were we talking about again?")

Markdown(r.text)

We were just talking about how much you enjoy playing in the mud when it’s raining outside!

I had just said it sounded like fun and asked if you make mud pies or build things with it.

The model remembered what we were talking about. We can take a look at its chat history.

for message in chat.get_history():
    print(f'role - {message.role}', end=": ")
    print(message.parts[0].text)

role - user: Hi, I like to play in the mud when it is raining outside.

role - model: That sounds like so much fun! There's nothing quite like the squishy, cool feeling of mud, especially when it's fresh from the rain. It's a classic joyful activity.

Do you make mud pies, build things, or just enjoy the wonderfully messy experience?

role - user: Wait, I forgot. What were we talking about again?

role - model: We were just talking about how much you enjoy playing in the mud when it's raining outside!

I had just said it sounded like fun and asked if you make mud pies or build things with it.

We can also create a chat with a system instruction and a config. This is a good way to create a teacher and student experience.

config=types.GenerateContentConfig(

    system_instruction="You are a wise teacher who cares deeply about students. "
    "You help students seek their curiosity in a fun and exciting way. "
    "Before helping, ask questions about their understanding of the subject. "
    "Keep responses concise and to the point. "
    "Instead of providing answers, let the student explore and find the answer. "
    "Provide the answer only when explicitly asked to."

)

chat = client.chats.create(

model="gemini-2.5-flash",

config=config,

)

def ask_chat(prompt):
    r = chat.send_message(prompt)
    display(Markdown(r.text))

ask_chat("Help me solve this question: 2 + 2 = ")

That’s a fun one! Before we dive in, what do you already know about what the ‘+’ sign tells us to do? What does it mean when we “add” numbers together?

ask_chat("No idea.")

No worries at all! Let’s think about it differently.

Imagine you have two delicious cookies. And then, I give you two more delicious cookies.

How many cookies do you have in total now?

ask_chat("I am confused on what you are trying to do here..")

Ah, I see! My apologies for the confusion.

What I’m trying to do is help you understand what “adding” means by using something you can picture.

In the math problem, 2 + 2, the ‘+’ sign means we’re putting things together.

So, when I talked about the cookies, I was showing you what it means to put two cookies together with two more cookies.

Does thinking about putting things together make a little more sense for what the ‘+’ sign does?

ask_chat("What is the answer to the question?")

The answer to 2 + 2 is 4.

By using the chat feature and system instructions, we created a simple tutor that can help a user solve a simple math problem. Instead of giving out the answer immediately, the tutor asks the user to think about the problem and provides hints. But the student can still ask for the answer directly if they want. Wasn’t it fun?
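Conceptually, a chat session can be pictured as a list of messages that grows with each turn and is handed to the model in full every time. The toy class below sketches that idea with a stand-in model function. It is an illustration only, not how the SDK's Chat object is implemented, and `ToyChat` and `echo_model` are hypothetical names.

```python
class ToyChat:
    """Keeps a running history and passes all of it to the model each turn."""

    def __init__(self, model_fn):
        self.model_fn = model_fn  # callable: list of messages -> reply text
        self.history = []

    def send_message(self, text):
        self.history.append({"role": "user", "text": text})
        reply = self.model_fn(self.history)
        self.history.append({"role": "model", "text": reply})
        return reply


def echo_model(history):
    """Stand-in for a real model: reports how many user turns it can see."""
    user_turns = [m for m in history if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s)."


chat_demo = ToyChat(echo_model)
chat_demo.send_message("Hi, I like to play in the mud.")
print(chat_demo.send_message("What were we talking about?"))
# prints: I can see 2 user message(s).
```

Because the whole history travels with every request, the second reply can refer back to the first message.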

Multimodal inputs

We can also send images to Gemini. Gemini can see the image and generate a response based on the image. Here is an example image. It is a picture of a duck carved from a pear. Let’s see what Gemini can do.

from PIL import Image

import matplotlib.pyplot as plt

duck_img_path = '../../img/duckpear.jpg'

duck_img = Image.open(duck_img_path)

plt.figure(figsize=(5, 5))

plt.imshow(duck_img)

plt.axis('off')

plt.show()

with open(duck_img_path, 'rb') as f:
    duck_img_bytes = f.read()

ask(prompt=[types.Part.from_bytes(data=duck_img_bytes, mime_type='image/jpeg'), 'What do you see in this image?'])

In this image, I see a beautifully crafted fruit carving, most likely a pear, shaped into a swan or duck.

Here are the details:

- Main Subject: A light-colored fruit (appearing golden-yellow to light brown), intricately carved into the form of a swan or duck.

- The bird’s head and a long, gracefully curved neck are distinct, with a small beak and a dark, perhaps hollowed-out, “eye.”

- The body is made up of numerous thin, fanned-out slices of the fruit, layered to create a feathered or winged effect.

- Surface: The carved fruit rests on a rustic, aged wooden cutting board. The board has visible wood grain and some signs of wear, including a distinct crack or groove towards the bottom right.

- Background Elements:

- To the left of the carved fruit, part of a knife (with a dark handle and silver blade) is visible on the cutting board.

- Further back and to the right, another whole, round, brownish fruit or vegetable (possibly another pear or a potato) is out of focus.

- The very blurred background suggests an indoor setting, possibly a kitchen or dining area, with dark furniture or objects.

The overall impression is one of culinary artistry and delicate presentation.

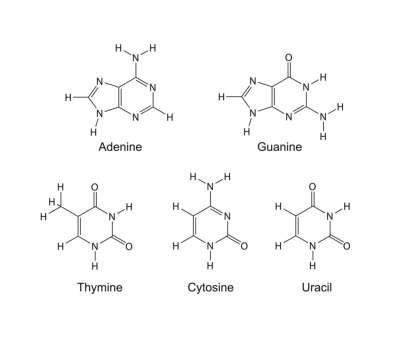

That was correct. It was probably too easy for it. Let’s do another image. It has five chemical structures that are found in DNA and RNA. Let’s see whether Gemini can recognize them.

from PIL import Image

import matplotlib.pyplot as plt

bases_img_path = '../../img/nitrogenous-bases.jpg'

bases_img = Image.open(bases_img_path)

plt.figure(figsize=(5, 5))

plt.imshow(bases_img)

plt.axis('off')

plt.show()

with open(bases_img_path, 'rb') as f:
    image_bytes = f.read()

ask(prompt=[types.Part.from_bytes(data=image_bytes, mime_type='image/jpeg'), 'What do you see in this image?'])

This image displays the chemical structures of five nitrogenous bases, which are fundamental components of DNA and RNA. Each structure is clearly labeled with its common name:

- Adenine: A purine base, characterized by a fused five-membered and six-membered ring system, with an amino group attached to the six-membered ring.

- Guanine: Also a purine base, featuring the same fused ring system as adenine but with an amino group and a carbonyl group.

- Thymine: A pyrimidine base, identified by a single six-membered ring with two carbonyl groups and a methyl group.

- Cytosine: A pyrimidine base, composed of a six-membered ring with one carbonyl group and one amino group.

- Uracil: A pyrimidine base, similar to thymine but lacking the methyl group, featuring a six-membered ring with two carbonyl groups.

The image is well-rendered with clear lines and labels, making the structures easy to distinguish.

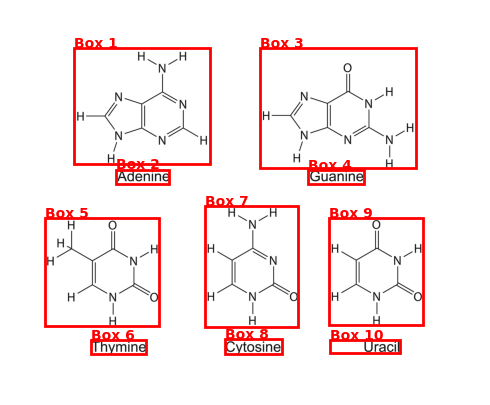
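Both image examples hardcode mime_type='image/jpeg'. If the files might be PNGs or other formats, the MIME type can be guessed from the file name with the standard library instead. This is a small sketch; `guess_image_mime_type` is a hypothetical helper name, and the PNG file name is only an illustration.

```python
import mimetypes


def guess_image_mime_type(path):
    """Guess an image MIME type from the file extension, or raise if unclear."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot determine an image MIME type for {path!r}")
    return mime


print(guess_image_mime_type("../../img/duckpear.jpg"))  # image/jpeg
print(guess_image_mime_type("diagram.png"))             # image/png
```

The result could be passed as the mime_type argument of types.Part.from_bytes in place of the literal string.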

Gemini can also do object detection. Let’s try it on the images with chemical structures.

import json

# Parentheses are needed so both string literals are joined into one prompt
prompt = (
    "Detect all of the chemical structures in the image. "
    "Pay close attention to outermost atoms. The box_2d should be [ymin, xmin, ymax, xmax] normalized to 0-1000."
)

# This config sets the response type to be JSON

config = types.GenerateContentConfig(

response_mime_type="application/json"

)

r = send_msg(prompt=[bases_img, prompt], config=config)

width, height = bases_img.size

bounding_boxes = json.loads(r.text)

converted_bounding_boxes = []

for bounding_box in bounding_boxes:
    # box_2d is [ymin, xmin, ymax, xmax] on a 0-1000 scale; convert to pixels
    abs_y1 = int(bounding_box["box_2d"][0]/1000 * height)
    abs_x1 = int(bounding_box["box_2d"][1]/1000 * width)
    abs_y2 = int(bounding_box["box_2d"][2]/1000 * height)
    abs_x2 = int(bounding_box["box_2d"][3]/1000 * width)
    converted_bounding_boxes.append([abs_x1, abs_y1, abs_x2, abs_y2])

print("Image size: ", width, height)

print("Bounding boxes:", converted_bounding_boxes)

Image size: 4508 3695

Bounding boxes: [[617, 369, 1920, 1481], [1018, 1533, 1528, 1670], [2398, 365, 3890, 1518], [2862, 1540, 3399, 1670], [338, 1998, 1433, 3037], [775, 3166, 1307, 3299], [1875, 1884, 2763, 3040], [2060, 3159, 2605, 3299], [3060, 2002, 3958, 3026], [3065, 3170, 3737, 3295]]

In this example, we set the response type to JSON to use structured output. We will go over that in more detail later. The response contains ten bounding boxes rather than five; judging by their sizes and positions, the extra boxes appear to be the name labels under each structure. It looks promising. Let’s plot the bounding boxes on the image.

import matplotlib.patches as patches

fig, ax = plt.subplots(1, 1, figsize=(5, 5))

ax.imshow(bases_img)

for i, bbox in enumerate(converted_bounding_boxes):
    x1, y1, x2, y2 = bbox
    rect = patches.Rectangle((x1, y1), x2-x1, y2-y1, linewidth=2, edgecolor='red', facecolor='none')
    ax.add_patch(rect)
    ax.text(x1, y1-5, f'Box {i+1}', color='red', fontsize=10, weight='bold')

ax.set_xlim(0, width)

ax.set_ylim(height, 0)

ax.axis('off')

plt.tight_layout()

plt.show()

That is pretty awesome. With this ability, it would be very convenient to create Anki cards for studying. For more image-related tasks, such as segmentation and uploading files, please refer to the Gemini documentation.
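Because the detection response is model-generated JSON, it may occasionally be malformed or contain entries without a usable box. A small validation step before the pixel conversion can make the notebook more robust. The sketch below assumes the response is a JSON list of objects with a box_2d field, as in the example above; `parse_boxes` is a hypothetical helper and the sample string is made up.

```python
import json


def parse_boxes(text):
    """Parse detection JSON and keep only well-formed box_2d entries."""
    items = json.loads(text)
    if not isinstance(items, list):
        raise ValueError("expected a JSON list of detections")
    boxes = []
    for item in items:
        box = item.get("box_2d") if isinstance(item, dict) else None
        if not (isinstance(box, list) and len(box) == 4):
            continue  # skip entries without four coordinates
        if not all(isinstance(v, (int, float)) and 0 <= v <= 1000 for v in box):
            continue  # skip coordinates outside the 0-1000 range
        ymin, xmin, ymax, xmax = box
        if ymin < ymax and xmin < xmax:
            boxes.append(box)
    return boxes


# Made-up sample: one valid box, one truncated box, one entry with no box.
sample = '[{"box_2d": [100, 137, 401, 426]}, {"box_2d": [5, 5]}, {"label": "no box"}]'
print(parse_boxes(sample))  # [[100, 137, 401, 426]]
```

In the notebook, `parse_boxes(r.text)` could replace the plain `json.loads(r.text)` call when only the coordinates are needed.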

Text to speech

Let’s do something cool and ask Gemini to read text out loud. You can also try out this feature in AI Studio. We’ve been using gemini-2.5-flash, but for this we will switch to “gemini-2.5-flash-preview-tts”. Here is Gemini reading the opening of a blog post, OMEGA: Can LLMs Reason Outside the Box in Math?

import wave

from IPython.display import Audio

# Set up the wave file to save the output:

def wave_file(filename, pcm, channels=1, rate=24000, sample_width=2):
    with wave.open(filename, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(sample_width)
        wf.setframerate(rate)
        wf.writeframes(pcm)

prompt="""Say cheerfully: Large language models (LLMs) like GPT-4, Claude, and DeepSeek-R1

have made headlines for their impressive performance on mathematical competitions,

sometimes approaching human expert levels or even exceeding it on Olympiad problems.

Yet a fundamental question remains: Are they truly reasoning or are they just recalling

familiar strategies without inventing new ones?"""

config=types.GenerateContentConfig(

response_modalities=["AUDIO"],

speech_config=types.SpeechConfig(

voice_config=types.VoiceConfig(

prebuilt_voice_config=types.PrebuiltVoiceConfig(voice_name='Kore')

)

),

)

r = send_msg(

prompt,

model="gemini-2.5-flash-preview-tts",

config=config,

)

data = r.candidates[0].content.parts[0].inline_data.data

wave_file('tts1.wav', data) # Saves the file to current directory

audio_file = 'tts1.wav'

display(Audio(audio_file))

We can also create a dialogue with multiple people in it.

prompt = """TTS the following conversation between Teacher and Student:

Teacher: "Alright class, today we're exploring Google's Gemini AI. Now, I know some of you think AI is just magic, but—"

Student: "Magic? Professor, I thought you said there's no such thing as magic in computer science. Are you telling me you've been lying to us this whole time?"

Teacher: chuckles "Touché, Sarah. Let me rephrase: Gemini might seem magical, but it's actually quite logical once you understand it. Think of it as a very sophisticated conversation partner."

Student: "A conversation partner that can also look at my photos, generate images, and call functions? Sounds like the kind of friend I need. Does it also do my homework?"

Teacher: "Well, it could help you understand concepts better. But let's start with the basics. Gemini uses something called 'system instructions' to set its behavior. It's like giving someone a role to play."

Student: "So if I tell it to be a pirate, it'll talk like one?"

Teacher: "Exactly! You could say 'You are a helpful pirate tutor' and it would explain calculus while saying 'ahoy matey.' The system instruction shapes its entire personality."

Student: "That's actually brilliant. So instead of getting boring explanations, I could have a Shakespeare character teach me physics?"

Teacher: "Now you're getting it! But here's where it gets interesting - Gemini can also process multiple types of input simultaneously. Text, images, audio, even video."

Student: "Wait, so I could show it a picture of my messy room and ask it to write a poem about entropy?"

Teacher: "Absolutely! That's called multimodal processing. But here's something even cooler - structured output. Instead of just getting text back, you can ask for specific formats like JSON."

Student: "JSON? You mean I could ask it to rate my terrible cooking and get back a proper data structure instead of just 'this looks questionable'?"

Teacher: laughs "Precisely! You could define a Recipe class with ratings, ingredients, and improvement suggestions. Very organized criticism."

Student: "Okay, but what about function calling? That sounds scary. Like, what if it decides to order pizza while I'm asking about math?"

Teacher: "Function calling is actually quite safe. You define exactly which functions it can use, like a toolbox. If you only give it a calculator function, it can't order pizza."

Student: "But what if I give it a pizza-ordering function?"

Teacher: "Then... well, you might get pizza. But that's on you, not the AI."

Student: "Fair point. What about this 'thinking' feature I heard about?"

Teacher: "Ah, that's fascinating! Gemini can show you its reasoning process. It's like seeing someone's rough draft before they give you the final answer."

Student: "So it's like looking at my brain when I'm solving a problem? That's either really cool or really terrifying."

Teacher: "More like watching a very organized person work through a problem step by step. You can even control how much thinking time it gets."

Student: "Can I give it infinite thinking time and see if it achieves consciousness?"

Teacher: "Let's not get ahead of ourselves. But you can set it to -1 for automatic thinking budget, which is pretty generous."

Student: "This is all great, but what about the practical stuff? Like, how do I actually use this thing?"

Teacher: "Simple! You get an API key, choose a model like gemini-2.0-flash, and start with client.models.generate_content(). The response comes back as text you can use immediately."

Student: "And if I want to have a longer conversation instead of just one-off questions?"

Teacher: "Use the chat interface with client.chats.create(). It remembers context, so you don't have to repeat yourself every time."

Student: "This sounds too good to be true. What's the catch?"

Teacher: "Well, you need to understand how to structure your requests properly. And like any tool, it's only as good as how you use it."

Student: "So basically, I need to learn how to talk to it properly?"

Teacher: "Exactly! Think of it as learning a new language - not programming language, but communication language. The better you get at asking questions, the better answers you'll get."

Student: "Alright, Professor, I'm convinced. When do we start building things with it?"

Teacher: "Right now! Let's start with a simple example and work our way up. Who knows? By the end of class, you might have your pirate physics tutor up and running."

Student: "Now that's what I call education!"""

config=types.GenerateContentConfig(

response_modalities=["AUDIO"],

speech_config=types.SpeechConfig(

multi_speaker_voice_config=types.MultiSpeakerVoiceConfig(

speaker_voice_configs=[

types.SpeakerVoiceConfig(

speaker='Teacher',

voice_config=types.VoiceConfig(

prebuilt_voice_config=types.PrebuiltVoiceConfig(

voice_name='Kore',

)

)

),

types.SpeakerVoiceConfig(

speaker='Student',

voice_config=types.VoiceConfig(

prebuilt_voice_config=types.PrebuiltVoiceConfig(

voice_name='Puck',

)

)

),

]

)

)

)

r = send_msg(

prompt,

model="gemini-2.5-flash-preview-tts",

config=config

)

data = r.candidates[0].content.parts[0].inline_data.data

wave_file('tts2.wav', data)

display(Audio('tts2.wav'))
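To sanity-check a file written by wave_file, its duration can be read back with the same standard-library module. The sketch below writes one second of silence in the format wave_file uses by default (mono, 16-bit samples, 24000 Hz) and measures it, so it runs without calling the API. `wav_duration_seconds` is a hypothetical helper name.

```python
import os
import tempfile
import wave


def wav_duration_seconds(path):
    """Return the length of a WAV file in seconds."""
    with wave.open(path, "rb") as wf:
        return wf.getnframes() / wf.getframerate()


# Write one second of silence: 24000 frames of 2-byte mono samples.
demo_path = os.path.join(tempfile.mkdtemp(), "silence.wav")
with wave.open(demo_path, "wb") as wf:
    wf.setnchannels(1)
    wf.setsampwidth(2)
    wf.setframerate(24000)
    wf.writeframes(b"\x00\x00" * 24000)

print(wav_duration_seconds(demo_path))  # 1.0
```

Calling `wav_duration_seconds('tts2.wav')` after the cell above would give the length of the generated dialogue.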